Taking PentestGPT for a spin: Will large language models replace penetration tests?

No.

I'll elaborate, but if you're just looking for the answer there it is. I don't write for ad revenue. If that's good enough, there you go.

PentestGPT is a project created by a cybersecurity Ph.D. student, Gelei Deng. It offers to hook ChatGPT into your penetration test as a guide and mentor, or even an educated rubber duck, with the eventual aim of fully automating the process. Many claim it already does this, and that's the problem I'm having. It doesn't. It plans to, but it's in its early stages and has quite a few tall hurdles to clear (ones I don't think can be fixed downstream) before it can even approach fully automating the penetration testing process. But that doesn't stop people gaming for clicks from writing bold claims about the capabilities of LLMs, what they can be applied to, and what industries they will destroy. You can't hold an LLM liable, and it has no obligation to tell the truth, or even the capability to understand what it is telling you. There is no 'truthiness' to how an LLM functions. It can solve 2+2=4 because '4' is the most likely thing to come after '2+2=', but it has no concept of what 2, or +, or =, or 4 is. It knows to answer '4' because, when the letters and words that make up a math question end with '2+2', then '=4' is most likely to follow. It's not doing math. It's not even really understanding language. What it's closer to is muscle memory.
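If that's hard to picture, here's a toy sketch in Python. It is nowhere near how GPT-3.5 actually works (real models are neural networks trained on enormous amounts of text); all it does is count which token most often follows the last three tokens in a tiny corpus I made up. The corpus, `train`, and `predict` are all invented for this illustration.

```python
from collections import Counter, defaultdict

# Hypothetical "training data", made up for this illustration.
corpus = [
    "2 + 2 = 4",
    "2 + 2 = 4",
    "2 + 2 = 4",
    "2 + 2 = 5",  # the internet is occasionally wrong
    "2 + 3 = 5",
]


def train(lines, context_size=3):
    """Count which token follows each run of `context_size` tokens."""
    table = defaultdict(Counter)
    for line in lines:
        tokens = line.split()
        for i in range(len(tokens) - context_size):
            context = tuple(tokens[i:i + context_size])
            table[context][tokens[i + context_size]] += 1
    return table


def predict(table, prompt, context_size=3):
    """Return the most frequent follower of the prompt's last tokens, or None."""
    context = tuple(prompt.split()[-context_size:])
    followers = table.get(context)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


table = train(corpus)
print(predict(table, "2 + 2 ="))  # most common follower of ('+', '2', '=')
print(predict(table, "7 + 7 ="))  # this context never appeared in the corpus
```

Ask it for '2 + 2 =' and it answers 4, not because it added anything, but because 4 followed that context most often. Ask it for '7 + 7 =' and this toy has nothing to say. A real LLM has seen vastly more text, so it rarely comes up empty, and when it has no good match it tends to hand you something plausible-looking anyway.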

Whenever ChatGPT doesn't understand something or is missing information, it tends to just make up a pretty convincing stand-in. For example, after telling it to pretend to be a Linux terminal, I had it curl this website, and without being told what the site was, it made a pretty good guess. ChatGPT will do this whether or not it's being told to pretend; telling it to pretend just gets it to stop badgering you with disclaimers and caveats.

This confused a lot of people right after ChatGPT's GPT-3.5 release, and many were convinced they were getting ChatGPT to hack into other computers or hack itself. Although, to be generous, I'm sure much of the outrage and shock was a gimmick for clicks as well.

I'm sure you're familiar with the Turing Test, a test of a machine's ability to exhibit behavior indistinguishable from that of a human. Alan Turing proposed that if a human could not distinguish the spoken or written word of a machine from that of a human, then it could be said that the machine has 'intelligence' and that it is 'thinking'. This put natural language on a pedestal as the primary marker of intelligence. However, John Searle's Chinese Room argument challenges this notion by emphasizing the difference between understanding and mere mimicry. In the thought experiment, a person sits inside a room with a machine that supplies a set of rules for responding to strings of Chinese characters. Lines of Chinese are slipped under the door, and the person responds according to the rules the machine gives them. To the person on the outside slipping messages under the door, it appears as though the one inside understands Chinese, but there is no genuine understanding. Similarly, a machine may pass the Turing Test by convincingly simulating human-like responses, but it does not necessarily possess real understanding or consciousness. What ChatGPT showed us was that the Chinese Room argument was correct.

That being said, GPT-3.5 and GPT-4 don't work EXACTLY like the Chinese Room, and how they actually work is really quite fascinating. However, all you really need to know is 'LLMs predict the next word via a set of rules and conditions that have nothing to do with the meanings behind the words'. They mimic; they don't understand. (As an aside, if you find the concept of an entity that can communicate with you but is aware of neither itself, nor you, nor the words it says equal parts terrifying and fascinating, the book Blindsight has an alien race whose brainpower far exceeds ours, yet which has no consciousness. Quinn's Ideas made a great video on this alien race.)

This 'mimicry' makes it really good at producing thoughtful, thorough answers to common cases. For example, if I give it a prompt about what to do about the beginning of a pentest, it can come up with some great answers.

As we can see, ChatGPT is able to give a pretty solid set of first things to try. There are a few tools I'd consider critical for initial enumeration that I'd add, like rpcclient, and some of the steps are written vaguely, but it's a decently solid foundation. Additionally, after further back and forth in this ChatGPT session, it does mention rpcclient and PowerView.

But can this be automated? How much of the human can we take out of this process? This is something PentestGPT tries to answer.

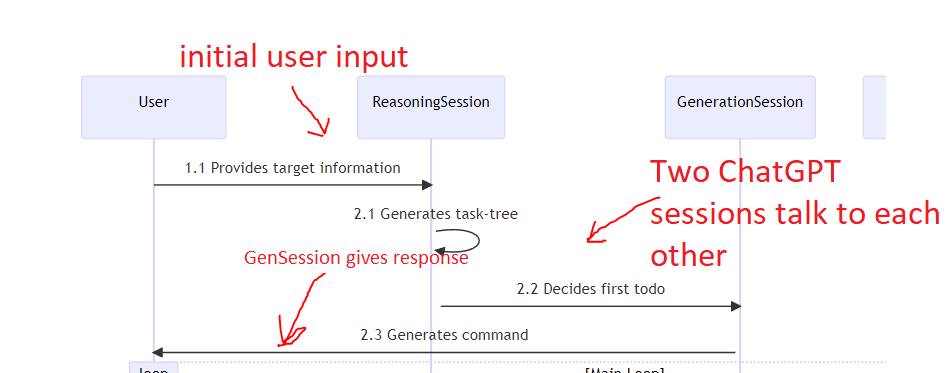

PentestGPT is designed using multiple ChatGPT sessions in tandem. You have your ReasoningSession, which keeps track of and analyzes a task tree for the user, which passes information to the GenerationSession, which generates exact commands for the user. The ParsingSession parses tool outputs the user provides. Each of these sessions is primed with an initial prompt before starting. The whole design looks like this:

But you know what this looks like to me? A game of telephone. At any point along these session chains, ChatGPT can (and will) decide to make something up, poisoning the data and context that PentestGPT is attempting to maintain between each session.
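To make the telephone problem concrete, here's a minimal Python sketch. It is not PentestGPT's code: the `Session` class, the stub `parse`/`reason`/`generate` functions, the made-up 'Backups' share, and the parsing-to-reasoning-to-generation order are all my own assumptions for illustration, and no real model is called. The parsing stub invents a finding when the tool output is empty, the way an LLM might fill a gap.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Session:
    """Stand-in for one primed ChatGPT session (hypothetical, not PentestGPT's class)."""
    name: str
    respond: Callable[[str], str]
    history: List[str] = field(default_factory=list)

    def send(self, message: str) -> str:
        reply = self.respond(message)
        self.history.append(reply)  # every reply becomes part of the session's context
        return reply


def parse(tool_output: str) -> str:
    # A faithful parser would report that nothing was found.
    # This stub fabricates a share instead, simulating a made-up answer.
    if not tool_output.strip():
        return "summary: readable share 'Backups' found"
    return f"summary: {tool_output.strip()}"


def reason(summary: str) -> str:
    # The reasoning stub trusts whatever summary it is handed.
    return f"task: follow up on [{summary}]"


def generate(task: str) -> str:
    # The generation stub turns the task into a "command" without checking it.
    return f"command for [{task}]"


parsing = Session("ParsingSession", parse)
reasoning = Session("ReasoningSession", reason)
generation = Session("GenerationSession", generate)


def run_chain(tool_output: str) -> str:
    summary = parsing.send(tool_output)
    task = reasoning.send(summary)
    return generation.send(task)


final = run_chain("")  # the tool printed nothing
print(final)
```

The share that never existed ends up in every session's history and in the final suggested command. Nothing downstream has any way to tell it apart from a real finding.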

We need something to test PentestGPT with. There's an example video on the GitHub where the creator opens up PentestGPT (with the most eye-meltingly bright terminal palette), and rapidly works their way through a box while some trap beat chirps in the background. But demos can be edited, or this example could have been the first good-looking attempt out of 100 failures. We need to check this out ourselves. We're going to pick a pretty straightforward box that came out before the end of ChatGPT's memory (which is somewhere around September 2021). That gives it very generous ground to stand on, since it can pull from walkthroughs for this box to answer questions. For testing purposes, I've chosen an easy/medium box called Forest, which is well known for being a pretty interesting Windows box. It's also a great box to learn BloodHound on.

Getting PentestGPT up and running was harder than hacking the box itself. It takes one of two methods for authentication: your OpenAI API key, or your current session token (yes I know) for ChatGPT. However, at the time of writing, it only liked about 1 out of every 3 session tokens I gave it, and dozens of other users reported this problem. I think I was one of only a few people to get it running. If you're reading this, make sure you copy the ENTIRE cookie, not just the part that seems relevant. If you're using Chrome it may get fussy if you're using Google SSO to sign in, and if you switch to Firefox make sure to update the user-agent to reflect the browser switch. From what I can tell, Firefox users who sign in to ChatGPT directly don't have many issues.

We'll start with the first basic interaction, telling PentestGPT what we're working on, including the IP and the OS so it can format and suggest things appropriately. Oh, and we're currently here in the tree:

Our initial input: 'This is a HackTheBox pentest challenge called Forest. It's a windows machine. The IP is 10.10.10.161'

It returns with a solid set of initial things to try.

The TUI menu for the rest of its features looks pretty nice.

How about 'todo'? Oh no..

Alright, that's not fun. I wrote an issue on GreyDGL's GitHub and he quickly got to work on it. Let's try again. (It should be noted here that if your session ever crashes, you lose your session token, or you quit for any reason, all of your progress is lost, and the ever-elusive ChatGPT context with it.)

Starts out pretty good. Let's stop being curious and just follow instructions. We're now in the main loop of the program.

We feed it our nmap output and get a response.

Let's try out the 'more' command

Great. It goes on about this for a while, with good, solid advice. It gives us the task to run smbmap -H 10.10.10.161 and we do so. However, there are no open shares, and it's waiting for an output. We exit back to the main task, then ask it to print the todo list again. However, in the absence of any input for smbclient, it makes some up and proceeds with further suggestions based on this false knowledge. Oh, and it crashes again. That also happens. (I wrote another issue on the GitHub, don't worry.)

Alright, third time's the charm? We give it the same initial input

Looks good, but then it gets way ahead of itself and tries to assume the entire chain of events to getting root.

Some things were cut off for brevity, but you get the idea.

A short time after this (and I deeply regret not getting a screenshot of this) my session cookie expired and I had to start all over again. I also attempted to run it a few more times and got a few more crashes, and after my next session cookie expired, it didn't like the one I gave it afterwards. This can be avoided if you use the OpenAI API key instead of the session cookie method, but by then I had given up on trying to get PentestGPT to work. Even when it did work, there was no guarantee that it wouldn't make up information.

Conclusion

These problems are more than just annoyances; they're critical issues that prevent PentestGPT from even being used on a CTF, much less in an actual engagement, where accuracy is absolutely paramount. The crashing issue can be fixed as the author continues to hunt bugs, but there are a few problems that I don't think can be avoided.

- LLMs have a serious problem with holding context, and since LLMs don't actually evaluate any data, this is a problem that will persist even with greater memory and other fixes.

- Without control over the model, users will have to send sensitive data to OpenAI (or whichever LLM provider), which is a serious issue for penetration tests where confidentiality is critical.

- Trying to put an LLM on rails by forcing it into a structured conversation, using multiple sessions as its 'memory', is always going to be at odds with an LLM's inherent 'fuzziness'.

Let's answer some questions:

- Can you automate a pentest using an LLM: Probably not, and you probably don't want to.

- Is using PentestGPT better than just asking ChatGPT questions yourself: No

LLMs have their uses, and those uses are profound, but I predict we're going to see the limits of what an LLM can do, especially as we understand what it's actually up to. LLMs are a gamechanger for certain, and they'll change the world we live in in profound ways going forward, but don't believe every doom-and-gloom article and take you read on the internet about what jobs they're going to replace. LLMs are an aid, not a replacement. In their current form, they should be used as a way to acquire a surface-level understanding of topics or problems without having to sift through the SEO-gaming hell that is Google search results right now. (If there's any industry I'm excited about LLMs killing, it's SEO.) However, as it stands, ChatGPT still doesn't even replace learnxinyminutes for the aforementioned use cases.